Additional Information

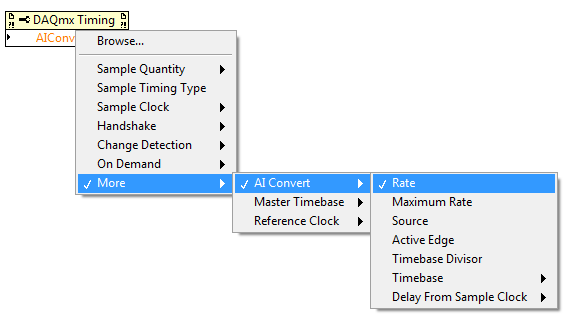

To set the AI Convert Clock rate to a specific value in LabVIEW, use a DAQmx Timing Property Node. Within this property node, select the AIConv.Rate property by navigating to More»AI Convert»Rate (see the image).

Please note that the AIConv.Rate property must be at least as high as the SampClk.Rate (Sample Clock Rate) property times the number of multiplexed channels in the scan. Otherwise, a DAQmx error will occur at run time.
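
The constraint above is simple arithmetic, so it can be checked before the task runs. The following is a minimal Python sketch of that check, not part of the LabVIEW workflow itself; the function names `min_ai_convert_rate` and `is_convert_rate_valid` are hypothetical helpers introduced for illustration, and the example rates are assumed values.

```python
def min_ai_convert_rate(sample_clock_rate_hz: float, num_channels: int) -> float:
    """Return the lowest AI Convert Clock rate that satisfies the rule
    AIConv.Rate >= SampClk.Rate * (number of multiplexed channels).

    Hypothetical helper for illustration; it only performs the arithmetic
    and does not talk to any hardware or driver.
    """
    if sample_clock_rate_hz <= 0:
        raise ValueError("sample clock rate must be positive")
    if num_channels < 1:
        raise ValueError("at least one channel is required")
    return sample_clock_rate_hz * num_channels


def is_convert_rate_valid(
    ai_conv_rate_hz: float, sample_clock_rate_hz: float, num_channels: int
) -> bool:
    """Check a proposed AIConv.Rate against the minimum computed above."""
    return ai_conv_rate_hz >= min_ai_convert_rate(sample_clock_rate_hz, num_channels)


if __name__ == "__main__":
    # Assumed example: 8 multiplexed channels sampled at 10 kHz each.
    sample_rate = 10_000.0
    channels = 8
    print("Minimum AIConv.Rate (Hz):", min_ai_convert_rate(sample_rate, channels))
    print("50 kHz acceptable?", is_convert_rate_valid(50_000.0, sample_rate, channels))
    print("100 kHz acceptable?", is_convert_rate_valid(100_000.0, sample_rate, channels))
```

This sketch covers only the lower bound described here. The device's own maximum convert rate and any settling-time requirements are not modeled, so consult the device specifications for the upper limit.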